🔢 Quantifying the impact of AI recommendations on clinical decision making, AI safety and quality in healthcare, and more

30th November, 2023

Kevin Sam

Hiya 👋

We’re back with another edition of the Digital Pharmacist Digest!

Here are this week's links that are worth your time.

Thanks for reading,

Kevin

📖 What I'm reading

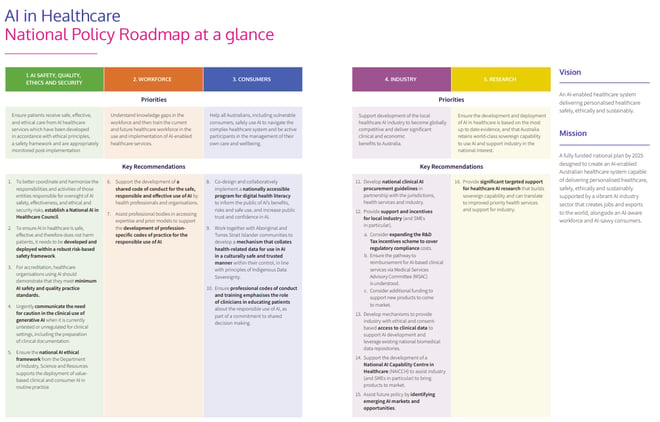

A National Policy Roadmap for Artificial Intelligence in Healthcare

🤖 Artificial Intelligence and 💊📈 Patient Safety

"Patients must receive safe, effective, and ethical care from AI-enabled healthcare services and be assured sensitive healthcare data are protected from cybersecurity threats, privacy breaches or unauthorised use"

"Effective healthcare AI governance will require a more unified and comprehensive approach. There are significant gaps and overlaps in remit which can only be identified through inter-agency collaboration, and targeted resourcing is needed to address emerging challenges. While clinical AI is subject to TGA software as a medical device (SaMD) safety regulation, non-medical generative AI like ChatGPT falls into a grey zone, where it is being used for clinical purposes but evades scrutiny because they are general purpose technologies not explicitly intended for healthcare. Uploading sensitive patient data into a non-medical AI like ChatGPT hosted on United States servers is also problematic from a privacy and consent perspective.

The current model for regulating AI was designed over 30 years ago in an environment where technology was single function and predictable. Today, governance needs to move from a ‘certify once’ model to one which ensures adaptive AI remains fit for purpose as it evolves. Localisation of AI models by health services will be a common strategy to deal with data biases, and governance is needed to ensure local AI services function as expected."

Quantifying the impact of AI recommendations with explanations on prescription decision making

🤖 Artificial Intelligence and 🩺💻 Health Informatics

"The influence of AI recommendations on physician behaviour remains poorly characterised. We assess how clinicians’ decisions may be influenced by additional information more broadly, and how this influence can be modified by either the source of the information (human peers or AI) and the presence or absence of an AI explanation (XAI, here using simple feature importance).

We used a modified between-subjects design where intensive care doctors (N = 86) were presented on a computer for each of 16 trials with a patient case and prompted to prescribe continuous values for two drugs. We used a multi-factorial experimental design with four arms, where each clinician experienced all four arms on different subsets of our 24 patients. The four arms were (i) baseline (control), (ii) peer human clinician scenario showing what doses had been prescribed by other doctors, (iii) AI suggestion and (iv) XAI suggestion.

We found that additional information (peer, AI or XAI) had a strong influence on prescriptions (significantly for AI, not so for peers) but simple XAI did not have higher influence than AI alone. There was no correlation between attitudes to AI or clinical experience on the AI-supported decisions and nor was there correlation between what doctors self-reported about how useful they found the XAI and whether the XAI actually influenced their prescriptions. Our findings suggest that the marginal impact of simple XAI was low in this setting and we also cast doubt on the utility of self-reports as a valid metric for assessing XAI in clinical experts."

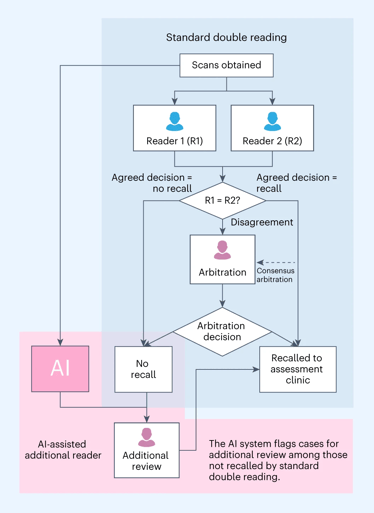

Prospective implementation of AI-assisted screen reading to improve early detection of breast cancer

🤖 Artificial Intelligence and 💊📈 Patient Safety

"Artificial intelligence (AI) has the potential to improve breast cancer screening; however, prospective evidence of the safe implementation of AI into real clinical practice is limited. A commercially available AI system was implemented as an additional reader to standard double reading to flag cases for further arbitration review among screened women."

"The results showed that, compared to double reading, implementing the AI-assisted additional-reader process could achieve 0.7–1.6 additional cancer detection per 1,000 cases, with 0.16–0.30% additional recalls, 0–0.23% unnecessary recalls and a 0.1–1.9% increase in positive predictive value (PPV) after 7–11% additional human reads of AI-flagged cases (equating to 4–6% additional overall reading workload)."

"This evaluation suggests that using AI as an additional reader can improve the early detection of breast cancer with relevant prognostic features, with minimal to no unnecessary recalls. Although the AI-assisted additional-reader workflow requires additional reads, the higher PPV suggests that it can increase screening effectiveness."
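For anyone who wants to see how the metrics quoted above relate to each other, here is a minimal sketch. It assumes the usual screening definitions: cancer detection rate is cancers detected per 1,000 women screened, recall rate is the share of screened women who are recalled, and PPV is the share of recalled women who turn out to have cancer. The function name `screening_metrics` and every count below are hypothetical and chosen only for illustration; they are not taken from the study, which may define these measures slightly differently.

```python
def screening_metrics(screened: int, recalled: int, cancers_detected: int) -> dict:
    """Return detection rate per 1,000, recall rate (%) and PPV (%).

    Assumes PPV = cancers detected / women recalled (an illustrative definition).
    """
    if screened <= 0 or recalled <= 0:
        raise ValueError("screened and recalled must be positive")
    return {
        "cdr_per_1000": 1000 * cancers_detected / screened,
        "recall_rate_pct": 100 * recalled / screened,
        "ppv_pct": 100 * cancers_detected / recalled,
    }


# Hypothetical counts, NOT data from the paper.
double_reading = screening_metrics(screened=10_000, recalled=300, cancers_detected=80)
with_ai = screening_metrics(screened=10_000, recalled=320, cancers_detected=90)

# Print the change in each metric when the hypothetical AI reader is added.
for name in double_reading:
    delta = with_ai[name] - double_reading[name]
    print(f"{name}: {delta:+.2f}")
```

The point of the sketch is that extra recalls only raise PPV when they find cancers at a higher rate than the existing recalls do, which is why a higher PPV alongside a few more recalls is read as a gain in screening effectiveness.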

Are you enjoying this digest? It would mean a lot if you'd consider forwarding it to someone who you think would appreciate it too!

Any comments provided are personal in nature and do not represent the views of any employer